Mike Lehmann

Marina Rost

Vera Schindler-Zins

The Coded Fairness Toolkit (digital and analogue).

Coded Fairness Project – Concept Overview

At the beginning of our thesis, we used Teachable Machine (https://teachablemachine.withgoogle.com) to explore the machine learning process and the obstacles that lead to biases.

Individual methods were tested remotely via Miro in user-testing sessions.

The "Your Persona" method in action.

The Coded Fairness Posters.

Coded Fairness Toolkit Poster Overview

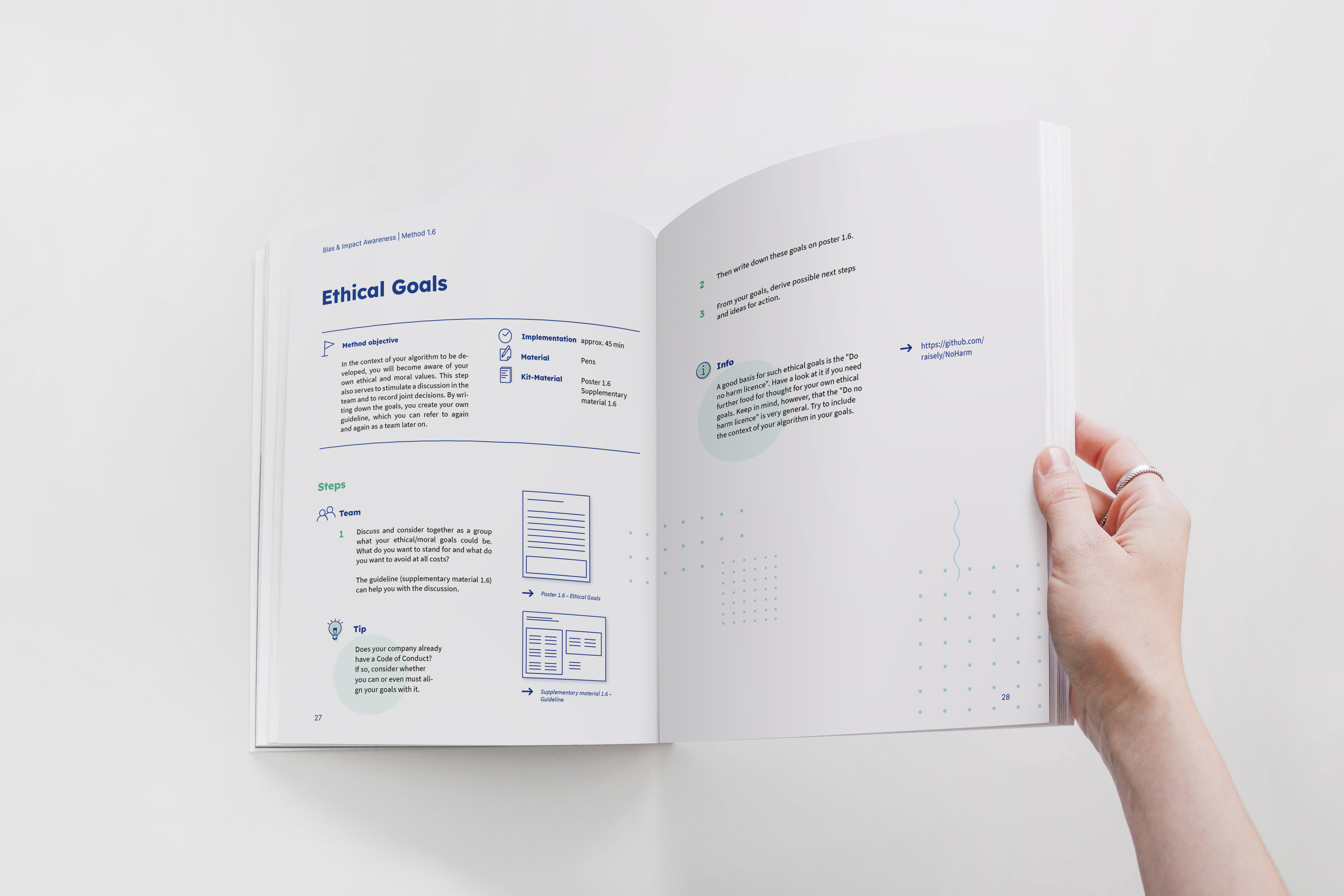

The Coded Fairness Toolkit Booklet, which explains the methods and provides additional background information. (Magazine PSD Mockup – www.mrmockup.com)

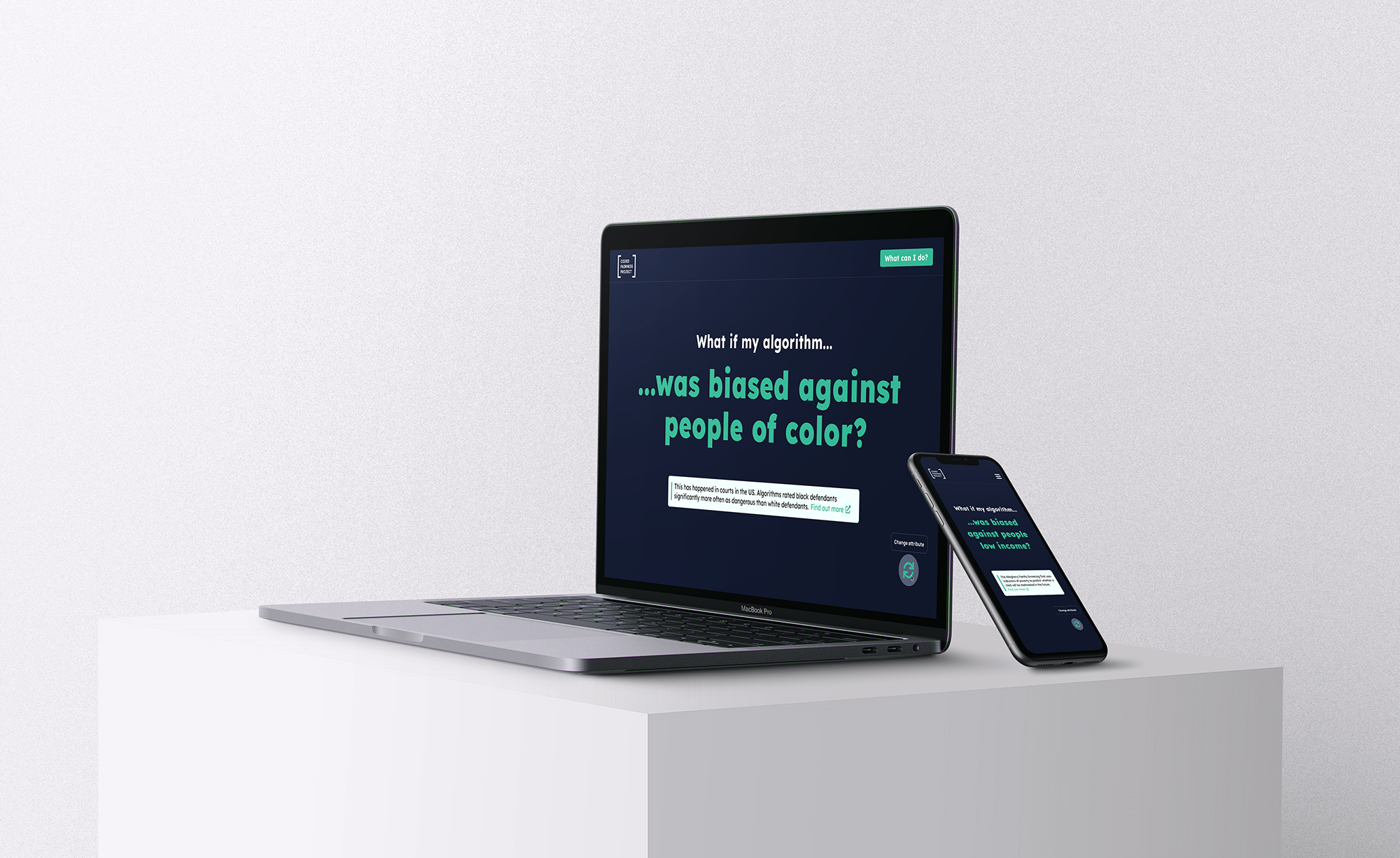

The website serves both as a communication tool, showing different examples of discrimination by algorithms, and as a product page. (Apple iPhone 11 & Macbook Pro Mockup (PSD) - www.unblast.com)

A certificate is intended to motivate companies to use our methods. (Flyer psd created by CosmoStudio - www.freepik.com)

Course

- Master's thesis

- Semester 3, Summer 2021

Supervisor

Topics